NLR is using the Virtual Cockpit project to study the development of technology that will make it possible to build a low-cost, efficient yet high-fidelity simulator based on Virtual Reality (VR). A new concept developed by NLR gives the user seamless interaction with the physical world, despite being detached from the physical environment by a VR headset. The demonstrator was displayed at ITEC 2018, the fair for military training and simulation, in Stuttgart, Germany.

AR/VR technology is maturing rapidly and this trend is likely to affect the future of simulation training. Compared with traditional simulators, this technology increases flexibility, reduces the footprint and lowers costs. The main problem of VR technology is that the operator becomes fully disconnected from the physical environment, often making it impossible to see their own hands or to get haptic feedback on interaction. A high degree of realism is an important requirement in training for military missions (the train-as-you-fight principle). NLR believes it is possible to develop a new system that does offer this immersive experience. It involves using off-the-shelf consumer products together with purpose-made physical parts, for example 3D-printed switches. This will allow training situations to be set up more quickly and more cheaply anywhere. In this instance, the team is working on developing a virtual environment of a helicopter cockpit, but ultimately the system will be deployable for all kinds of simulations, such as other aircraft, trains and ships.

VR interaction with physical feedback

Ronald van Gimst, a senior R&D engineer at NLR, explains what the project aims to accomplish: “In a VR environment, the user sees only the images presented by the VR headset and nothing of the surrounding world. So the user doesn’t even see his own hands in the simulation and gets no haptic feedback when touching virtual objects. This is not a problem when playing computer games for fun, but it does pose a problem when you want to experience highly realistic training situations. The bottom line is that even when wearing a VR headset you want to be able to use physical instruments in a simulation to enhance its realism, because that’s extremely important in military training. Without having to think about it, you must be able to interact with your environment in a natural way.”

This latter point presents a major challenge: how do you make actions feel exactly the same as they do in the real world? It can be accomplished in various ways, but the most important thing is that the physical object must be in exactly the same place as it is in the VR visualisation. When touching a physical object in the training environment, the movement must correspond with the one that occurs in the virtual world. By way of example, Van Gimst says that if you want to pick up a coffee cup in a virtual world and the physical cup is 5 centimetres to the left, you will inevitably encounter problems.
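The coffee cup example can be made concrete with a small check. The sketch below is only an illustration, not NLR's method: it assumes both positions are expressed in metres in the same coordinate frame, and the 0.5 cm tolerance and the function names are hypothetical.

```python
import math


def registration_error_cm(physical_xyz, virtual_xyz):
    """Distance in centimetres between a physical object and its virtual twin.

    Both positions are assumed to be (x, y, z) tuples in metres,
    expressed in the same coordinate frame.
    """
    return math.dist(physical_xyz, virtual_xyz) * 100.0


def is_aligned(physical_xyz, virtual_xyz, tolerance_cm=0.5):
    """True if the offset is within a tolerance (0.5 cm is a made-up value)."""
    return registration_error_cm(physical_xyz, virtual_xyz) <= tolerance_cm


# The cup from the example: the physical cup sits 5 cm to the left.
cup_virtual = (0.40, 0.00, 0.75)
cup_physical = (0.35, 0.00, 0.75)

if __name__ == "__main__":
    print(registration_error_cm(cup_physical, cup_virtual))
    print(is_aligned(cup_physical, cup_virtual))
```

A check like this could be run for every instrument in the mock-up after it has been assembled, so that misplaced parts are found before a trainee reaches for them.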

Accurate down to the fingertips

“We’ve tried various techniques”, he says. “They include Leap Motion, which makes a representation of your hand in the virtual world, sometimes referred to as a rigid body in the gaming world. Or a glove fitted with flex sensors. The sensors measure the bending of your fingers and thus present the position of the phalanges in the VR image. We’ve also tried Inertial Measurement Units (IMUs) to measure the acceleration and position of your fingers. But none of this was accurate enough for our purposes.”

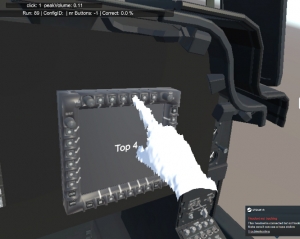

Ultimately, the team hit upon the idea of using a depth camera. “The depth camera does achieve the accuracy we need when measuring the location of the fingertips. Pressing a virtual button then exactly matches the location of the physical button in the training environment.”

The depth camera delivers its depth image as a point cloud. A colour camera provides an image of the user’s hand, so a colour picture of the hand appears in the VR image. The colour image creates a slightly greater sense of immersion, although Van Gimst says it is not strictly necessary, because the point cloud provides sufficient feedback.
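The article does not say how the team relates depth images and point clouds. One common approach is pinhole back-projection, sketched below under that assumption. The intrinsics `fx`, `fy`, `cx` and `cy` (focal lengths and principal point in pixels) and the function name are illustrative, not taken from the NLR system.

```python
def depth_to_point_cloud(depth, fx, fy, cx, cy):
    """Back-project a depth image into 3D points with the pinhole camera model.

    depth is a 2D list of distances in metres along the optical axis;
    a value of 0 (or less) is treated as "no reading" and skipped.
    Returns a list of (x, y, z) tuples in the camera frame.
    """
    points = []
    for v, row in enumerate(depth):          # v: pixel row
        for u, z in enumerate(row):          # u: pixel column
            if z <= 0:
                continue
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


if __name__ == "__main__":
    tiny_depth = [[0.0, 1.0],
                  [2.0, 0.0]]
    print(depth_to_point_cloud(tiny_depth, fx=1.0, fy=1.0, cx=0.0, cy=0.0))
```

In a real setup the intrinsics would come from the camera's calibration, and the resulting points would still have to be transformed from the camera frame into the frame of the VR scene.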

Using consumer electronics does present certain challenges. “Often you cannot use more than one device at the same time, whereas you want to connect multiple identical parts to your system, for example”, says Van Gimst. In cases like this, the team has to come up with solutions to make it work within the software.

Immersive, touch and low-cost

“The biggest wow factor is when you see that the mock-up (the physical model for the simulation) is a simple screwed-together wooden cockpit that allows you to step into the VR world where everything is in the right place and like the real thing. The objects may be fixed 3D-printed buttons, or buttons that you can press and that feel and click like a real button. They don’t need to send an electric signal to the system, because all of that happens in the VR environment through the detection of the hand’s position by the cameras.”
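How a press might be detected from the hand's position alone can be sketched as follows. This is a hypothetical illustration, not the NLR implementation: it assumes the tracked fingertip and the button are given in metres in the same frame, that the button is pushed along the negative z axis, and that the `VirtualButton` class, its fields and the numeric values are invented for the example.

```python
import math
from dataclasses import dataclass


@dataclass
class VirtualButton:
    name: str
    centre: tuple   # (x, y, z) of the unpressed button cap, in metres
    radius: float   # radius of the cap, in metres
    travel: float   # push depth along -z that counts as a press, in metres

    def is_pressed(self, fingertip):
        """True if the fingertip is over the cap and has pushed it far enough."""
        fx, fy, fz = fingertip
        cx, cy, cz = self.centre
        lateral = math.hypot(fx - cx, fy - cy)  # distance from the button axis
        depth = cz - fz                         # how far the cap was pushed in
        return lateral <= self.radius and depth >= self.travel


if __name__ == "__main__":
    button = VirtualButton("MASTER CAUTION", (0.10, 0.20, 0.50), 0.010, 0.003)
    print(button.is_pressed((0.10, 0.20, 0.50)))    # resting on the cap
    print(button.is_pressed((0.102, 0.20, 0.496)))  # pushed in 4 mm
```

Because the decision is made from the tracked position, the physical button only has to move and click. It needs no wiring, which matches the unwired 3D-printed buttons described in the quote.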

A final point Van Gimst mentions is that this kind of low-cost simulation will inspire others to put forward ideas that NLR has not yet even considered. “Compare it with a smartphone: ten years ago we could not have imagined all of the things we now routinely do with such a device. Most of this was not laid out by the people who put the devices on the market. This is how we are going to see the emergence of new applications of simulation.”

For more information: Ronald van Gimst
